Every Salesforce developer has probably run into governor limits and the problems they cause. However, it is not the idea of a limit that causes trouble (after all, life is tough, and we all know it), but the fact that these limits are quite hard to control.

To be fair, you can keep some governor limits under control while writing code. For instance, you can track the number of SOQL queries, although even this is not always possible: it takes no genius to come up with a case in which queries are generated dynamically, making their number very hard, if not impossible, to predict. And that is before we even get to heap size or CPU time!
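
To make this concrete, here is a small Python model (not Apex) of a hypothetical loader that walks a record hierarchy level by level, issuing one bulkified query per level. The tree shapes, the helper names and the one-query-per-level rule are assumptions for illustration; the only real figure is the platform limit of 100 SOQL queries per synchronous Apex transaction. The point is that the same number of records can cost 3 queries or 211, depending purely on how the records are linked.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Real platform limit: 100 SOQL queries per synchronous Apex transaction.
SYNC_SOQL_LIMIT = 100


@dataclass
class Node:
    """A record in a hypothetical parent/child hierarchy."""
    children: list[Node] = field(default_factory=list)


def build_tree(depth: int, branching: int) -> Node:
    """Build a synthetic hierarchy `depth` levels deep with `branching` children per node."""
    root = Node()
    if depth > 1:
        root.children = [build_tree(depth - 1, branching) for _ in range(branching)]
    return root


def count_records(node: Node) -> int:
    """Total number of records in the hierarchy."""
    return 1 + sum(count_records(child) for child in node.children)


def queries_for_traversal(root: Node) -> int:
    """Assumed loader: one bulkified query per level (children WHERE ParentId IN :ids)."""
    queries, level = 0, [root]
    while level:
        queries += 1
        level = [child for node in level for child in node.children]
    return queries


if __name__ == "__main__":
    shapes = {"wide": (3, 14), "deep": (211, 1)}
    for name, (depth, branching) in shapes.items():
        tree = build_tree(depth, branching)
        q = queries_for_traversal(tree)
        print(f"{name}: {count_records(tree)} records, {q} queries, within limit: {q <= SYNC_SOQL_LIMIT}")
```

Running it prints `wide: 211 records, 3 queries, within limit: True` and `deep: 211 records, 211 queries, within limit: False`: identical data volume, yet only the shape of the data decides whether the limit is hit.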

Recently, at Softheme, we received a request to finish a project, an application to be more specific. It was a very well-written application: a neat and convenient interface, structured code, easily readable and logically organized overall. And yet, as it turned out, some problems lay hidden beneath the surface, and after a month of use they appeared out of nowhere. What sort of problems, you ask? Exceeded governor limits! While the application was being built, no one had foreseen anything of the kind. After an in-depth analysis, we realized that the entire business logic had to be rebuilt, and the UI needed changes as well to solve the problem. The cost of fixing all of this was comparable to developing a new application from scratch.

This intrigued me, so I decided to look into the situation further and started consulting developers from all around the world. As it turned out, this case is far from unique. Granted, the fix is not always this expensive. Nevertheless, project managers rarely get through it without losing some hair, while developers earn their panda eyes finding a way around the problem.

So what can you do to avoid this problem? The obvious answer is: analyze the requirements! Still, practice shows that teams are often so focused on functional completeness that performance and governor-limit concerns (the very ones we ran into) are covered only superficially, if at all.

With that in mind, I'd suggest the following approaches to get ahead of governor-limit issues:

- Data modeling. Generate synthetic data that lets you estimate the number of queries and the data volume. It pays to put real effort into this, because the relationships between the synthetic records should match, or at least closely resemble, those in real life. That is exactly what happened on the project I mentioned above: everything worked fine on a synthetic database of the specified size, but the way the entities were actually related in real life changed the picture significantly!

- Data sanitization. I strongly suggest building a tool that sanitizes the customer's real data so that you can use it during development. This is usually much easier and faster than building an adequate model, not to mention that it may help you find other problems in the application that have nothing to do with governor limits.
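
A sanitization tool can start very small. Below is a minimal Python sketch, assuming records have already been exported as dictionaries and that you know which fields are sensitive; the field names, the `SECRET` key and the helper names are hypothetical. It replaces sensitive values with keyed HMAC-SHA256 pseudonyms but leaves Ids and lookup fields untouched, so the relationships between records, the very thing synthetic data tends to get wrong, survive intact.

```python
import hashlib
import hmac

# Hypothetical per-project key; keep it out of source control in real use.
SECRET = b"replace-with-a-project-secret"


def pseudonym(value: str, prefix: str) -> str:
    """Deterministic pseudonym: equal inputs map to equal outputs."""
    digest = hmac.new(SECRET, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"


def sanitize(records: list[dict], sensitive_fields: set[str]) -> list[dict]:
    """Return copies of `records` with sensitive fields pseudonymized.

    Ids and lookup fields are not touched, so parent/child links are preserved.
    Empty values (None, "") are left as they are.
    """
    cleaned = []
    for record in records:
        copy = dict(record)
        for name in sensitive_fields:
            if copy.get(name):
                copy[name] = pseudonym(str(copy[name]), name)
        cleaned.append(copy)
    return cleaned


if __name__ == "__main__":
    contacts = [
        {"Id": "c1", "AccountId": "a1", "LastName": "Smith", "Email": "j.smith@example.com"},
        {"Id": "c2", "AccountId": "a1", "LastName": "Smith", "Email": "j.smith@example.com"},
    ]
    for row in sanitize(contacts, {"LastName", "Email"}):
        print(row)
```

Because the pseudonyms are deterministic, matching or duplicate-detection logic still sees two identical emails as identical, while the real values never reach the development org.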

When debugging an application against such prepared data, you first need to find out what exactly triggers the problem. So where do we look? In the log files, of course! Detailed Salesforce debug logs contain plenty of useful information about governor limit usage.

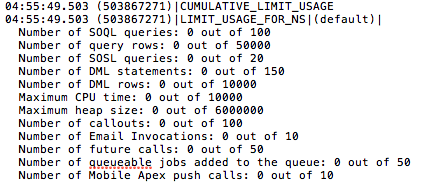

First, there is the summary.

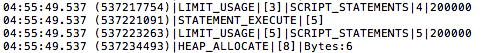

Second, there are the notifications about limit usage.

Naturally, I understand that any of you can find a governor-limit problem in your own code without any logs. But think of the cases where triggers fire (in the most unexpected places), or new workflows appear (hastily created by an administrator before you had a chance to notice), or something similar: then log analysis no longer looks like a useless toy. Instead, it becomes a genuinely useful tool.
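
You do not have to read the limit notifications by eye, either. The Python sketch below scans debug-log text for lines of the form `Number of SOQL queries: 97 out of 100` and reports the limits that crossed a threshold. It assumes that line format, merges namespaces by keeping the peak value per limit name, and uses hypothetical helper names; the sample log is illustrative, not a real capture.

```python
import re

# Matches lines such as "  Number of SOQL queries: 97 out of 100".
LIMIT_LINE = re.compile(r"^\s*(?P<name>[^:|]+?):\s*(?P<used>\d+) out of (?P<limit>\d+)")


def limit_usage(lines):
    """Return {limit name: (peak used, limit)} across every limit block in the log."""
    usage = {}
    for line in lines:
        match = LIMIT_LINE.match(line)
        if not match:
            continue
        name = match.group("name").strip()
        used, limit = int(match.group("used")), int(match.group("limit"))
        peak = max(used, usage.get(name, (0, limit))[0])
        usage[name] = (peak, limit)
    return usage


def hot_limits(usage, threshold=0.8):
    """Limits used at or above `threshold` of their maximum, worst first."""
    hot = [(name, used, limit) for name, (used, limit) in usage.items()
           if limit and used / limit >= threshold]
    return sorted(hot, key=lambda item: item[1] / item[2], reverse=True)


SAMPLE_LOG = """\
12:00:00.100 (100)|CUMULATIVE_LIMIT_USAGE
12:00:00.100 (100)|LIMIT_USAGE_FOR_NS|(default)|
  Number of SOQL queries: 97 out of 100
  Number of query rows: 1200 out of 50000
  Maximum CPU time: 8500 out of 10000
  Maximum heap size: 0 out of 6000000
12:00:00.100 (100)|CUMULATIVE_LIMIT_USAGE_END
"""

if __name__ == "__main__":
    for name, used, limit in hot_limits(limit_usage(SAMPLE_LOG.splitlines())):
        print(f"{name}: {used}/{limit}")
```

For the sample above, this prints `Number of SOQL queries: 97/100` followed by `Maximum CPU time: 8500/10000`; the query-row and heap figures stay below the 80% threshold.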

We could have stopped here, if only we could forget about the size of log files and how much "fun" they are to work with! Remember this image?

Let me help you recall it. In one of my previous articles I wrote:

“At the maximum log detail level, the log for 4 primitive unit tests will exceed 2000 lines. 2000 lines, Carl! This is what one of our developers looked like when he saw a 2 MB log file (to be clear, it had over 20,000 lines in it). He knew the answer was in there somewhere, but even the thought of searching for it in a file this large brought the depicted grimace onto his face.”
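
A 20,000-line log is not something to read top to bottom, but it is easy to scan programmatically. As a minimal sketch, assuming the `SOQL_EXECUTE_BEGIN|[line]|Aggregations:n|query` event layout (verify it against your own logs), the Python below streams a log line by line and counts how often each query is issued from each source line, which quickly points at a query running inside a loop. The event records a line number but not the class name, so the result still has to be matched against the surrounding code-unit entries; the helper name and sample lines are hypothetical.

```python
import re
from collections import Counter

# Assumed layout: "...|SOQL_EXECUTE_BEGIN|[14]|Aggregations:0|SELECT Id FROM Contact ..."
SOQL_BEGIN = re.compile(r"\|SOQL_EXECUTE_BEGIN\|\[(?P<line>\d+)\]\|[^|]*\|(?P<query>.*)$")


def soql_hotspots(lines, top=5):
    """Count SOQL executions per (source line, query text); most frequent first."""
    counts = Counter()
    for raw in lines:
        match = SOQL_BEGIN.search(raw.rstrip("\n"))
        if match:
            counts[(int(match.group("line")), match.group("query").strip())] += 1
    return counts.most_common(top)


if __name__ == "__main__":
    sample = [
        "12:00:00.010 (10)|SOQL_EXECUTE_BEGIN|[30]|Aggregations:0|SELECT Id FROM Account",
        "12:00:00.020 (20)|SOQL_EXECUTE_BEGIN|[14]|Aggregations:0|SELECT Id FROM Contact WHERE AccountId = :tmpVar1",
        "12:00:00.030 (30)|SOQL_EXECUTE_BEGIN|[14]|Aggregations:0|SELECT Id FROM Contact WHERE AccountId = :tmpVar1",
        "12:00:00.040 (40)|SOQL_EXECUTE_END|[14]|Rows:3",
        "12:00:00.050 (50)|SOQL_EXECUTE_BEGIN|[14]|Aggregations:0|SELECT Id FROM Contact WHERE AccountId = :tmpVar1",
    ]
    for (line_no, query), n in soql_hotspots(sample):
        print(f"line {line_no}: {n}x {query}")
    # For a real file, pass the open handle so it is read lazily:
    # with open("debug.log", encoding="utf-8") as log: print(soql_hotspots(log))
```

Passing an open file object instead of a list keeps memory use flat no matter how large the log is.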

At the moment, The Welkin Suite team is working on a profiler based on log files. The main idea is to let users run a piece of functionality (for instance, Anonymous Apex or unit tests) multiple times, gather the average execution time and, most importantly, collect governor-limit usage data and plot it as a graph. We will go deeper into this topic sometime in the future.
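
The aggregation step of such a profiler is simple to sketch. The Python below is not The Welkin Suite's implementation; it is a hypothetical illustration that assumes each run has already been reduced to a duration and a `{limit name: (used, limit)}` mapping. It averages the duration, records the mean and peak usage of each limit, and draws a crude text bar as a stand-in for a real graph.

```python
from statistics import mean


def summarize_runs(runs):
    """Aggregate repeated runs.

    Each run is assumed to look like {"duration_ms": float, "limits": {name: (used, limit)}}.
    """
    names = sorted({name for run in runs for name in run["limits"]})
    limits = {}
    for name in names:
        samples = [run["limits"][name] for run in runs if name in run["limits"]]
        used = [u for u, _ in samples]
        limits[name] = {"mean": mean(used), "peak": max(used), "limit": samples[0][1]}
    return {"avg_duration_ms": mean(run["duration_ms"] for run in runs), "limits": limits}


def usage_bar(used, limit, width=20):
    """Text bar for used/limit, capped at `width` characters."""
    filled = min(width, round(width * used / limit)) if limit else 0
    return "#" * filled + "." * (width - filled)


if __name__ == "__main__":
    runs = [
        {"duration_ms": 120.0, "limits": {"SOQL queries": (40, 100), "CPU time": (3000, 10000)}},
        {"duration_ms": 80.0, "limits": {"SOQL queries": (60, 100), "CPU time": (2000, 10000)}},
        {"duration_ms": 100.0, "limits": {"SOQL queries": (50, 100), "CPU time": (2500, 10000)}},
    ]
    summary = summarize_runs(runs)
    print(f"average duration: {summary['avg_duration_ms']:.1f} ms")
    for name, stats in summary["limits"].items():
        bar = usage_bar(stats["peak"], stats["limit"])
        print(f"{name:>12} [{bar}] mean {stats['mean']}, peak {stats['peak']} of {stats['limit']}")
```

Plotting the peak rather than only the mean is a deliberate choice here: a limit is exceeded by the worst run, not the average one.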

I would love to hear your thoughts about this tool! Do you have any expectations about what it should or should not do? Maybe some of you face similar issues and badly need such a tool? Please share in the comments! I would be more than glad to discuss it with you.
